Funding for Agri-food Data Canada is provided in part by the Canada First Research Excellence Fund

Semantic Engine

In research and data-intensive environments, precision and clarity are critical. Yet one of the most common sources of confusion—often overlooked—is how units of measure are written and interpreted.

Take the unit micromolar, for example. Depending on the source, it might be written as uM, μM, umol/L, μmol/l, or umol-1. Each of these notations attempts to convey the same concentration unit. But when machines—or even humans—process large amounts of data across systems, this inconsistency introduces ambiguity and errors.

The role of standards

To ensure clarity, consistency, and interoperability, standardized units are essential. This is especially true in environments where data is:

- Shared across labs or institutions

- Processed by machines or algorithms

- Reused or aggregated for meta-analysis

- Integrated into digital infrastructures like knowledge graphs or semantic databases

Standardization ensures that “1 μM” in one dataset is understood exactly the same way in another, which helps make data FAIR (Findable, Accessible, Interoperable, and Reusable).

UCUM: Unified Code for Units of Measure

One widely adopted system for encoding units is UCUM—the Unified Code for Units of Measure. Developed by the Regenstrief Institute, UCUM is designed to be unambiguous, machine-readable, compact, and internationally applicable.

In UCUM:

- micromolar becomes `umol/L`

- acre becomes `[acr_us]`

- milligrams per deciliter becomes `mg/dL`

This kind of clarity is vital when integrating data or automating analyses.

UCUM doesn’t include all units

While UCUM covers a broad range of units, it’s not exhaustive. Many disciplines use niche or domain-specific units that UCUM doesn’t yet describe. This can be a problem when strict adherence to UCUM would mean leaving out critical information or forcing awkward approximations. Furthermore, UCUM doesn’t offer an exhaustive list of all possible units; instead, the UCUM specification describes rules for creating units. For the Semantic Engine, we have adopted and extended existing lists of units to create a list of common agri-food units that the Semantic Engine can use.

Unit framing overlays of the Semantic Engine

To bridge the gap between familiar, domain-specific unit expressions and standardized UCUM representations, the Semantic Engine supports what’s known as a unit framing overlay.

Here’s how it works:

- Researchers can input units in a familiar format (e.g., `acre` or `uM`).

- Researchers can add a unit framing overlay which helps them map their units to UCUM codes (e.g., `"[acr_us]"` or `"umol/L"`).

- The result is data that is human-friendly, machine-readable, and standards-compliant, all at the same time.

This approach offers both flexibility for researchers and consistency for machines.
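The mapping idea behind a unit framing overlay can be sketched in a few lines of Python. This is only an illustration: the dictionary name `UNIT_FRAMING`, the helper `to_ucum`, and the structure itself are assumptions made for this example, not the Semantic Engine's actual overlay format.

```python
from typing import Optional

# Hypothetical mapping from the unit strings a researcher typed to UCUM codes.
# The structure is illustrative, not the Semantic Engine's overlay format.
UNIT_FRAMING = {
    "uM": "umol/L",
    "umol/L": "umol/L",
    "acre": "[acr_us]",
    "mg/dL": "mg/dL",
}


def to_ucum(unit: str) -> Optional[str]:
    """Return the UCUM code for a familiar unit string, or None if unmapped."""
    return UNIT_FRAMING.get(unit.strip())


for familiar in ["uM", "acre", "bushel"]:
    # Units without a mapping come back as None so they can be flagged.
    print(familiar, "->", to_ucum(familiar))
```

Returning `None` for an unmapped unit, rather than guessing, keeps the original entry visible so that someone can decide how it should be mapped.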

Final thoughts

Standardized units aren’t just a technical detail—they’re a cornerstone of data reliability, semantic precision, and interoperability. Adopting standards like UCUM helps ensure that your data can be trusted, reused, and integrated with confidence.

By adopting unit framing overlays with UCUM, ADC enables data documentation that meets both the practical needs of researchers and the technical requirements of modern data infrastructure.

Written by Carly Huitema

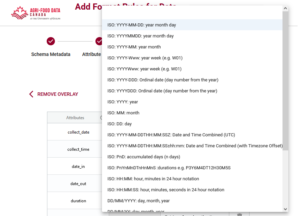

When designing a data schema, you’re not only choosing what data to collect but also how that data should be structured. Format rules help ensure consistency by defining the expected structure for specific types of data and are especially useful for data verification.

For example, a date might follow the YYYY-MM-DD format, an email address should look like name@example.com, and a DNA sequence may only use the letters A, T, G, and C. These rules are often enforced using regular expressions or standardized format types to validate entries and prevent errors. Using the Semantic Engine, we have already described how users can select format rules for data input. Now we introduce the ability to add custom format rules for your data verification.

Format rules are in Regex

Format rules that are understood by the Semantic Engine are written in a language called Regex.

Regex—short for regular expressions—is a powerful pattern-matching language used to define the format that input data must follow. It allows schema designers to enforce specific rules on strings, such as requiring that a postal code follow a certain structure or ensuring that a genetic code only includes valid base characters.

For example, a simple regex for a 4-digit year would be: `^\d{4}$`

This means the value must consist of exactly four digits.
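As a quick check of that pattern, here is a minimal Python sketch. Python's `re` module is not the JavaScript flavour, so two choices below are assumptions made to keep the behaviour strict: `re.ASCII` limits `\d` to the digits 0-9, and `fullmatch` rejects a value with a trailing newline. The function name `is_four_digit_year` is made up for this example.

```python
import re

# The four-digit year pattern from the text. re.ASCII restricts \d to 0-9.
YEAR_PATTERN = re.compile(r"^\d{4}$", re.ASCII)


def is_four_digit_year(value: str) -> bool:
    """True when the whole value consists of exactly four ASCII digits."""
    return YEAR_PATTERN.fullmatch(value) is not None


for candidate in ["2025", "25", "20255", "20a5"]:
    print(repr(candidate), is_four_digit_year(candidate))
```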

Flavours of Regex

While regex is a widely adopted standard, it comes in different flavours depending on the programming language or system you’re using. Common flavours include:

- PCRE (Perl Compatible Regular Expressions) – used in PHP and many other systems

- JavaScript Regex – the flavour used in browsers and front-end validation

- Python Regex (re module) – similar to PCRE with some minor syntax differences

- POSIX – a more limited, traditional regex used in Unix tools like `grep` and `awk`

The Semantic Engine uses a flavour of regex aligned with JavaScript-style regular expressions, so it’s important to test your patterns using tools or environments that support this style.

Help writing Regex

Regex is notoriously powerful, but also notoriously easy to get wrong. A misplaced symbol or an overly broad pattern can lead to incorrect validation or unexpected matches. Online tools such as ChatGPT and other AI assistants can help you start writing and understanding your regex. You can also paste in an unfamiliar regex and ask an AI assistant to explain it.

Other online regex tools are also available.

The Need for Testing

It’s essential to test your regular expressions before using them in your schema. Always test your regex with both expected inputs and edge cases to ensure your data validation is reliable and robust. With the Semantic Engine you can export a schema with your custom regex and then use it with a dataset with the data verification tool to test your regex.
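One way to organise this kind of testing outside the Semantic Engine is a small table of inputs and expected outcomes. The sketch below is in Python for convenience; the DNA pattern, the test cases, and the helper `run_cases` are all assumptions made for illustration. Simple patterns like this one behave the same in JavaScript-style regex, but more advanced syntax should be checked in a JavaScript-compatible tool.

```python
import re

# Hypothetical rule for a DNA sequence: one or more of A, T, G, C.
DNA_PATTERN = re.compile(r"^[ATGC]+$")

# (input, should_match) pairs covering expected values and edge cases.
CASES = [
    ("ATGC", True),
    ("GATTACA", True),
    ("", False),        # empty entry
    ("atgc", False),    # lowercase is not allowed by this pattern
    ("ATGN", False),    # the letter N is outside the rule
    ("ATG C", False),   # embedded space
]


def run_cases(pattern, cases):
    """Return the cases whose actual result differs from the expectation."""
    failures = []
    for text, expected in cases:
        actual = pattern.fullmatch(text) is not None
        if actual != expected:
            failures.append((text, expected, actual))
    return failures


failures = run_cases(DNA_PATTERN, CASES)
print("all cases pass" if not failures else failures)
```

Keeping the edge cases next to the pattern makes it easy to rerun them whenever the rule changes.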

By using regex effectively, the Semantic Engine ensures that your data conforms to the exact formats you need, improving data quality, interoperability, and trust in your datasets.

Written by Carly Huitema

When you’re building a data schema you’re making decisions not only about what data to collect, but also how it should be structured. One of the most useful tools you have is format restrictions.

What Are Format Entries?

A format entry in a schema defines a specific pattern or structure that a piece of data must follow. For example:

- A date must look like YYYY-MM-DD or be in the ISO duration format.

- An email address must have the format name@example.com

- A DNA sequence might only include the letters A, T, G, and C

These formats are usually enforced using rules like regular expressions (regex) or standardized format types.

Why Would You Want to Restrict Format?

Restricting the format of data entries is about ensuring data quality, consistency, and usability. Here’s why it’s important:

✅ To Avoid Errors Early

If someone enters a date as “15/03/25” instead of “2025-03-15”, you might not know whether that’s March 15, 2025 or March 25, 2015. A clear format prevents confusion and catches errors before they become a problem.

✅ To Make Data Machine-Readable

Computers need consistency. A standardized format means data can be processed, compared, or validated automatically. For example, if every date follows the YYYY-MM-DD format, it’s easy to sort them chronologically or filter them by year. This is especially helpful for sorting files in folders on your computer.
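A short Python sketch shows why this works. The example dates are invented, and the second list writes the same days as DD/MM/YY for comparison.

```python
from datetime import date

iso_dates = ["2025-03-15", "2024-11-02", "2025-01-30"]
slash_dates = ["15/03/25", "02/11/24", "30/01/25"]  # same days as DD/MM/YY

# Plain text sorting of ISO dates is already chronological.
iso_sorted = sorted(iso_dates)
chronological = sorted(iso_dates, key=date.fromisoformat)
print(iso_sorted == chronological)

# Plain text sorting of DD/MM/YY strings orders by day of month instead.
print(sorted(slash_dates))

# Filtering by year is a simple prefix check.
in_2025 = [d for d in iso_dates if d.startswith("2025-")]
print(in_2025)
```

Because the year comes first, then the month, then the day, sorting the text character by character gives the same order as sorting the actual dates.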

✅ To Improve Interoperability

When data is shared across systems or platforms, shared formats ensure everyone understands it the same way. This is especially important in collaborative research.

Format in the Semantic Engine

Using the Semantic Engine you can add a format feature to your schema and describe what format you want the data to be entered in. While the schema writes the format rule in RegEx, you don’t need to learn how to do this. Instead, the Semantic Engine uses a set of prepared RegEx rules that users can select from. These are documented in the format GitHub repository where new format rules can be proposed by the community.

After you have created format rules in your schema you can use the Data Entry Web tool of the Semantic Engine to verify your results against your rules.

Final Thoughts

Format restrictions may seem technical, but they’re essential to building reliable, reusable, and clean data. When you use them thoughtfully, they help everyone—from data collectors to analysts—work more confidently and efficiently.

Written by Carly Huitema

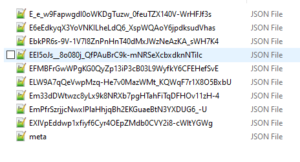

If you have already been using the Semantic Engine to write your schemas, you will have come across the .zip schema bundle. This is the machine-readable version of your schema written in JSON, where each component of your schema is a separate file inside a .zip folder.

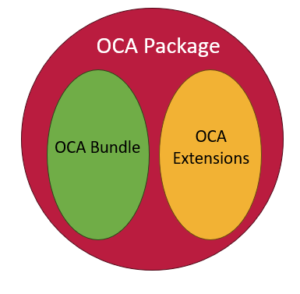

Overlays Capture Architecture (OCA) has now transitioned to a schema package that has the same content plus a few extra pieces of information and a new way to extend the functionality of schemas. First, rather than each component of the schema being in a separate file inside a .zip, the components are listed one after another inside a single .json file. The content of each schema component is the same as in the schema bundle. We found that .zip files were a problem, especially for our Mac users, and they were also challenging to include in repositories. The change to a single JSON object addresses these challenges.

Second, ADC has extended the functionality of OCA to cover use cases that aren’t included in the original specification such as attribute ordering. These extra overlays follow the same syntax and structure of OCA and are in a second object called extensions. Both the OCA bundle and its extensions are included in a JSON file called OCA Package. You can read more about this update on the OCA Package specification.

Because OCA uses derived identifiers, this structure means we can give a derived identifier to the OCA Bundle which contains standard, well specified overlays. We also give researchers and developers flexibility to add their own functionalities via the OCA extensions where new additions won’t change the core bundle derived identifier. Finally, the entire OCA Package is given a derived identifier thus binding all the content together. When you reference your OCA schema you should use the Package identifier.
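The layering of identifiers can be illustrated with a small Python sketch. It is a stand-in, not the OCA algorithm: a SHA-256 digest over sorted-key JSON is assumed here in place of OCA's real identifier derivation, and the field names, the sample content, and the helpers `derived_id` and `package_id` are hypothetical.

```python
import hashlib
import json


def derived_id(obj) -> str:
    """Stand-in derived identifier: SHA-256 of the object's canonical JSON."""
    canonical = json.dumps(obj, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def package_id(bundle, extensions) -> str:
    """Identifier for the whole package, binding the bundle id and extensions."""
    return derived_id({"bundle_id": derived_id(bundle), "extensions": extensions})


# Hypothetical, heavily simplified content; real OCA objects have more fields.
bundle = {"attributes": {"animal_id": "Text", "milk_yield": "Numeric"}}
extensions = {"ordering": ["milk_yield", "animal_id"]}

before = (derived_id(bundle), package_id(bundle, extensions))

# Add a new (hypothetical) extension and derive both identifiers again.
extensions["notes"] = "hypothetical extra overlay"
after = (derived_id(bundle), package_id(bundle, extensions))

print("bundle id unchanged:", before[0] == after[0])
print("package id changed:", before[1] != after[1])
```

The point of the sketch is the separation: a change to the extensions never touches the bundle's identifier, while the package identifier always reflects everything inside the package.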

One of the first extensions we have added to an OCA schema is the ability to keep ordering of attributes. You may have noticed that in an OCA schema all the attributes are ordered alphabetically. Now with the ordering overlay we support user-entered ordering and this functionality is included now in all Semantic Engine tools.
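To make the difference concrete, here is a minimal Python sketch of alphabetical ordering versus user-entered ordering. The attribute names, the `ordering` list, and the helper `apply_ordering` are assumptions for illustration and do not reproduce the actual ordering overlay format.

```python
# Hypothetical schema attributes and a user-entered ordering.
attributes = {"milk_yield": "Numeric", "animal_id": "Text", "sample_date": "DateTime"}
ordering = ["animal_id", "sample_date", "milk_yield"]


def apply_ordering(attribute_names, ordering):
    """Listed attributes first, in the given order, then any others alphabetically."""
    listed = [name for name in ordering if name in attribute_names]
    unlisted = sorted(set(attribute_names) - set(ordering))
    return listed + unlisted


print("alphabetical:", sorted(attributes))
print("user-entered:", apply_ordering(attributes, ordering))
```

Falling back to alphabetical order for attributes missing from the list is a design choice made for this sketch, so that no attribute is silently dropped.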

The Semantic Engine can continue to consume and use .zip schema bundles but now the default will be to export .json schema packages. The .json packages will continue to be developed and so if you are using any .zip schema bundles you can open them using the Semantic Engine and export them to obtain the .json version.

Written by Carly Huitema

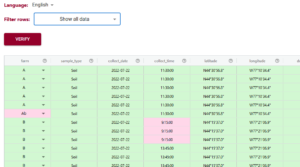

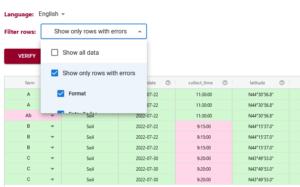

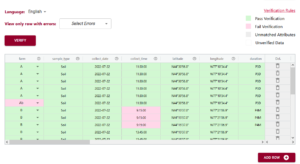

Using the Semantic Engine you can enter both your data and your schema and compare your data against the rules of your schema. This is useful for data verification, and the tool is called Data Entry Web (DEW).

When you use the DEW tool, all your data will be verified and the cells coloured green or red depending on whether or not they match the rules set out in the schema.

The filtering tool of DEW has been improved to help users more easily find which data doesn’t pass the schema rules. This can be very helpful when you have very large datasets. Now you can filter your data to show only those rows that have errors. You can even filter further and specify which types of errors you want to look at.

Once you have identified your rows that have errors you can correct them within the DEW tool. After you have corrected all your errors you can verify your data again to check that all corrections have been applied. Then you can export your data and continue on with your analysis.
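The verify, filter, and correct loop can be sketched in Python as follows. This is not how DEW is implemented; the rules, the sample rows, and the helpers `row_errors` and `rows_with_errors` are hypothetical and only illustrate filtering rows by whether they have errors and by error type.

```python
import re

# Hypothetical schema rules: a format pattern and an entry-code list.
RULES = {
    "sample_date": {"format": re.compile(r"^\d{4}-\d{2}-\d{2}$")},
    "breed": {"entry_codes": {"Holstein", "Jersey"}},
}

rows = [
    {"sample_date": "2025-03-15", "breed": "Holstein"},
    {"sample_date": "15/03/25", "breed": "Jersey"},
    {"sample_date": "2025-03-16", "breed": "Unknown"},
]


def row_errors(row):
    """Return a list of (attribute, error_type) pairs for one row."""
    errors = []
    for attribute, rule in RULES.items():
        value = row.get(attribute, "")
        if "format" in rule and not rule["format"].fullmatch(value):
            errors.append((attribute, "format"))
        if "entry_codes" in rule and value not in rule["entry_codes"]:
            errors.append((attribute, "entry_code"))
    return errors


def rows_with_errors(rows, error_type=None):
    """Keep only rows with errors, optionally restricted to one error type."""
    selected = []
    for index, row in enumerate(rows):
        errors = [e for e in row_errors(row) if error_type in (None, e[1])]
        if errors:
            selected.append((index, errors))
    return selected


print(rows_with_errors(rows))            # every row that fails a rule
print(rows_with_errors(rows, "format"))  # only rows with format errors
```

After correcting the flagged rows, running `rows_with_errors` again and getting an empty list corresponds to the re-verification step described above.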

Written by Carly Huitema

Understanding Data Requires Context

Data without context is challenging to interpret and utilize effectively. Consider an example: raw numbers or text without additional information can be ambiguous and meaningless. Without context, data fails to convey its full value or purpose.

By providing additional information, we can place data within a specific context, making it more understandable and actionable – more FAIR. This context is often supplied through metadata, which is essentially “data about data.” A schema, for instance, is a form of metadata that helps define the structure and meaning of the data, making it clearer and more usable.

The Role of Schemas in Contextualizing Data

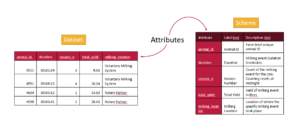

A data schema is a structured form of metadata that provides crucial context to help others understand and work with data. It describes the organization, structure, and attributes of a dataset, allowing data to be more effectively interpreted and utilized.

A well-documented schema serves as a guide to understanding the dataset’s column labels (attributes), their meanings, the data types, and the units of measurement. In essence, a schema outlines the dataset’s structure, making it accessible to users.

For example, each column in a dataset corresponds to an attribute, and a schema specifies the details of that column:

- Units: What units the data is measured in (e.g., meters, seconds).

- Format: What format the data should follow (e.g., date formats).

- Type: Whether the data is numerical, textual, boolean etc.

The more features included in a schema to describe each attribute, the richer the metadata, and the easier it becomes for users to understand and leverage the dataset.

Writing and Using Schemas

When preparing to collect data—or after you’ve already gathered a dataset—you can enhance its usability by creating a schema. Tools like the Semantic Engine can help you write a schema, which can then be downloaded as a separate file. When sharing your dataset, including the schema ensures that others can fully understand and use the data.

Reusing and Extending Schemas

Instead of creating a new schema for every dataset, you can reuse existing schemas to save time and effort. By building upon prior work, you can modify or extend existing schemas—adding attributes or adjusting units to align with your specific dataset requirements.

One Schema for Multiple Datasets

In many cases, one schema can be used to describe a family of related datasets. For instance, if you collect similar data year after year, a single schema can be applied across all those datasets.

Publishing schemas in repositories (e.g., Dataverse) and assigning them unique identifiers (such as DOIs) promotes reusability and consistency. Referencing a shared schema ensures that datasets remain interoperable over time, reducing duplication and enhancing collaboration.

Conclusion

Context is essential to making data understandable and usable. Schemas provide this context by describing the structure and attributes of datasets in a standardized way. By creating, reusing, and extending schemas, we can make data more accessible, interoperable, and valuable for users across various domains.

Written by Carly Huitema

Using an ontology in agri-food research provides a structured and standardized way to manage the complex data that is common in this field. Ontologies are an important tool to improve data FAIRness.

Ontologies define relationships between concepts, allowing researchers to organize information about crops, livestock, environmental conditions, agricultural practices, and food systems in a consistent manner. This structured approach ensures that data from different studies, regions, or research teams can be easily integrated and compared, helping with collaboration and knowledge sharing across the agri-food domain.

One key advantage of ontologies in agri-food research is their ability to enable semantic interoperability. By using a shared vocabulary and a defined set of relationships, researchers can ensure that the meaning of data remains consistent across different systems and databases. For example, when studying soil health, an ontology can define related terms such as soil type, nutrient content, and pH level, ensuring that these concepts are understood uniformly across research teams and databases.

Moreover, ontologies allow for enhanced data analysis and discovery. They support advanced querying, reasoning, and the ability to infer new knowledge from existing data. In agri-food research, where data is often generated from diverse sources such as satellite imaging, field sensors, and lab experiments, ontologies provide a framework to draw connections between different datasets, leading to insights into food security, climate resilience, and sustainable agriculture.

Agri-food Data Canada is working to make it easier to incorporate ontologies into research by developing tools to help incorporate ontologies into research data.

One way you can connect ontologies to your research data is through Entry Codes (aka pick lists) of a data schema. By limiting entries for a specific attribute (aka variables or data columns) to a selected list drawn from an ontology you can be sure to use terms and definitions that a community has already established. Using the Data Entry Web tool of the Semantic Engine you can verify that your data uses only allowed terms drawn from the entry code list. This helps maintain data quality and ensures it is ready for analysis.
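As a rough illustration of checking a column against an entry code list, consider the Python sketch below. The attribute, the codes, and the `EX:` identifiers are placeholders invented for this example; they are not real ontology term IDs, and the helper `disallowed_entries` is hypothetical.

```python
# Hypothetical entry codes for a "soil_type" attribute. The identifiers are
# placeholders, not real ontology term IDs.
SOIL_TYPE_CODES = {
    "clay": "EX:0001",
    "loam": "EX:0002",
    "sand": "EX:0003",
}


def disallowed_entries(values, entry_codes):
    """Return the values that are not in the entry code list, in order."""
    return [value for value in values if value not in entry_codes]


column = ["clay", "loam", "Loam", "silt"]
print(disallowed_entries(column, SOIL_TYPE_CODES))
```

The check is case-sensitive in this sketch, so "Loam" is flagged alongside "silt"; whether capitalisation should matter is a decision to make when defining the entry codes.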

There are many places to find ontologies as a source for terms. For example, the organization CGIAR has published a resource of common ontologies for agriculture.

Agri-food Data Canada is continuing to develop ways to more easily incorporate standard terms and ontologies into researcher data, helping improve data FAIRness and contributing to better cross-domain data integration.

Written by Carly Huitema

My last post was all about where to store your data schemas and how to search for them. Now let’s take it to the next step – how do I search for what’s INSIDE a data schema – in other words how do I search for the variables or attributes that someone has described in their data schema? A little caveat here – up to this point, we have been trying to take advantage of National data platforms that are already available – how can we take advantage of these with our services? Notice the words in that last statement “up to this point” – yes that means we have some new options and tools coming VERY soon. But for now – let’s see how we can take advantage of another National data repository odesi.ca.

Findable in the FAIR principles?

How can a data schema help us meet the recommendations of this principle? Well…. technically I showed you one way in my last post – right? Finding the data schema or the metadata about our dataset. But let’s dig a little deeper and try another example using the Ontario Dairy Research Centre (ODRC) data schemas to find the variables that we’re measuring this time.

As I noted in my last post there are more than 30 ODRC data schemas and each has a listing of the variables that are being collected. As a researcher who works in the dairy industry – I’m REALLY curious to see WHAT is being collected at the ODRC – by this I mean – what variables, measures, attributes. But, when I look at the README file for the data schemas in Borealis, I have to read it all and manually look through the variable list OR use some keyboard combination to search within the file. This means I need to search for the data schema first and then search within all the relevant data schemas. This sounds tedious and heck I’ll even admit too much work!

BUT! There is another solution – odesi.ca – another National data repository hosted by Ontario Council of University Libraries (OCUL) that curates over 5,700 datasets, and has recently incorporated the Borealis collection of research data. Let’s see what we can see using this interface.

Let’s work through our example – I want to see what milking variables are being used by the ODRC – in other words, are we collecting “milking” data? Let’s try it together:

- Visit odesi.ca

- To avoid the large number of results from any searches, let’s start by restricting our results to the Borealis entries – Under Collection – Uncheck Select All – Select Borealis Research Data

- In the Find Data box type: milking

- Since we are interested in which variables collect milking data – change Anywhere to Variable

- Click Search

- I see over 20 results – notice that there are Replication dataset results along with the data schema results. The Replication dataset entries refer to studies that have been conducted and whose data has been uploaded and made available to researchers – this is fabulous – it shows you how previous projects have also collected milking data.

For our purposes – let’s review the information related to the ODRC Data Schema entries. Let’s pick the first one on my list, ODRC data schema: Tie stalls and maternity milkings. Notice that it states there is one Matching Variable? It is the variable called milking_device. If you select the data schema you will see all the relevant and limited study level metadata along with a DOI for this schema. By selecting the variable you will also see a little more detail regarding the chosen attribute.

NOTE – there is NO data with our data schemas – we have added dummy data to allow odesi.ca to search at a variable level, but we are NOT making any data available here – we are using this interface to increase the visibility of the types of variables we are working with. To access any data associated with these data schemas, researchers need to visit the ODRC website as noted in the associated study metadata.

I hope you found this as exciting as I do! Researchers across Canada can now see what variables and information are being collected at our Research Centres – so cool!!

Look forward to some more exciting posts on how to search within and across data schemas created by the Semantic Engine. Go try it out for yourself!!!


With the introduction of using OCA schemas for data verification let’s dig a bit more into the format overlay which is an important piece for data verification.

When you are writing a data schema using the Semantic Engine you can build up your schema documentation by adding features. One of the features that you can add is called format.

In an OCA (Overlays Capture Architecture) schema, you can specify the format for different types of data. This format dictates the structure and type of data expected for each field, ensuring that the data conforms to certain predefined rules. For example, for a numeric data type, you can define the format to expect only integers or decimal numbers, which ensures that the data is valid for calculations or further processing. Similarly, for a text data type, you can set a format that restricts the input to a specific number of characters, such as a string up to 50 characters in length, or constrain it to only allow alphanumeric characters. By defining these formats, the OCA schema provides a mechanism for validating the data, ensuring it meets the expected requirements.

Specifying the format for data in an OCA schema is valuable because it supports consistency and accuracy in data entry and validation. By imposing these rules, you can prevent errors such as inputting the wrong type of data (e.g., letters instead of numbers) or exceeding field limits. This level of control reduces data corruption, minimizes the risk of system errors, and improves the quality of the information being collected or shared. When systems across different platforms adhere to these defined formats, it enables seamless data exchange and interoperability, improving data FAIRness.

The rules for defining data formats in an OCA schema are typically written using Regular Expressions (RegEx). RegEx is a sequence of characters that forms a search pattern, used for matching strings against specific patterns. It allows for very precise and flexible definitions of what is considered valid data. For example, RegEx can specify that a field should contain only digits, letters, or specific formats like dates (YYYY-MM-DD) or email addresses. RegEx is widely used for input validation because of its ability to handle complex patterns and enforce strict rules on data format, making it ideal for ensuring data consistency in systems like OCA.

To help our users be consistent, the Semantic Engine limits users to a set of format rules, which is documented in the format rule GitHub repository. If the rule you want isn’t listed here it can be added by reaching out to us at ADC or raising a GitHub issue in the repository.

After you have added format rules to your data schema you can use the data verification tool to check your data against your new schema rules.

Written by Carly Huitema

When data entry into an Excel spreadsheet is not standardized, it can lead to inconsistencies in formats, units, and terminology, making it difficult to interpret and integrate research data. For instance, dates entered in various formats, inconsistent use of abbreviations, or missing values can cause problems during analysis, leading to errors.

Organizing data according to a schema—essentially a predefined structure or set of rules for how data should be entered—makes data entry easier and more standardized. A schema, such as one written using the Semantic Engine, can define fields, formats, and acceptable values for each column in the spreadsheet.

Using a standardized Excel sheet for data entry ensures uniformity across datasets, making it easier to validate, compare, and combine data. The benefits include improved data quality, reduced manual cleaning, and streamlined data analysis, ultimately leading to more reliable research outcomes.

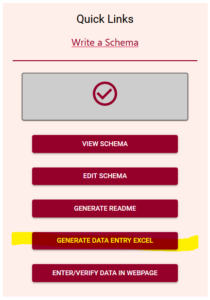

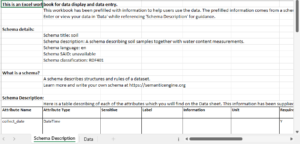

After you have created a schema using the Semantic Engine, you can use this schema (the machine-readable version) to generate a Data Entry Excel.

When you open your Data Entry Excel you will see it consists of two sheets, one for the schema description and one for data entry. The schema description sheet takes information from the schema that was uploaded and puts it into an information table.

At the very bottom of the information table are listed all of the entry code lists from the schema. This information is used on the data entry side for populating drop-down lists.

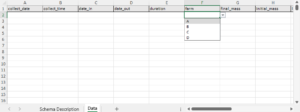

On the data entry sheet of the Data Entry Excel you can find the pre-labeled columns for data entry according to the rules of your schema. You can rearrange the columns as you want, and you can see that the Data Entry Excel comes with prefilled dropdown lists for those variables (attributes) that have entry codes. There is no dropdown list if the data is expected to be an array of entries or if the list is very long. As well, you will need to wrestle with Excel time/date attributes to have them appear according to what is documented in the schema description.

There is no verification of data in Excel that is set up when you generate your Data Entry Excel apart from the creation of the drop-down lists. For data verification you can upload your Data Entry Excel to the Data Verification tool available on the Semantic Engine.

Using the Data Entry Excel feature lets you put your data schemas to use, helping you document and harmonize your data. You can store your data in Excel sheets with pre-filled information about what kind of data you are collecting! You can also use this to easily collect data as part of a larger project where you want to combine data later for analysis.

Written by Carly Huitema