Semantic Engine: Range verification

Streamlining Data Documentation in Research

In research, data documentation is often a complex and time-consuming task. To help researchers better document their data, ADC has created the Semantic Engine, a powerful tool for creating structured, machine-readable data schemas. These schemas serve as blueprints that describe the various features and constraints of a dataset, making it easier to share, verify, and reuse data across projects and disciplines.

Defining Data

By guiding users through the process of defining their data in a standardized format, the Semantic Engine not only improves data clarity but also enhances interoperability and long-term usability. Researchers can specify the types of data they are working with, the descriptions of data elements, units of measurement used, and other rules that govern their values—all in a way that computers can easily interpret.

Introducing Range Overlays

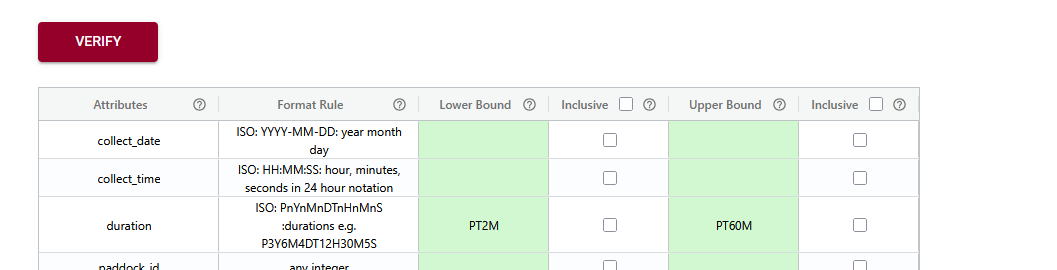

With its latest update, the Semantic Engine now includes support for a new feature: range overlays.

Range overlays allow researchers to define expected value ranges for specific data fields, and to state whether each bound is inclusive or exclusive (e.g. greater than, but not including, zero). This is particularly useful for quality control and verification. For example, if a dataset is expected to contain only positive values (such as temperatures in kelvin, population counts, or financial figures), the range overlay can be used to enforce this expectation. By specifying acceptable minimum and maximum values, researchers can quickly identify anomalies, catch data entry errors, and ensure their datasets meet predefined standards.
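To make the idea of inclusive and exclusive bounds concrete, here is a minimal Python sketch. It is an illustration only and does not reproduce the Semantic Engine's actual overlay format; the `RangeRule` class and its field names are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class RangeRule:
    """Hypothetical range constraint for one data field (not the Semantic Engine's format)."""

    minimum: Optional[float] = None  # None means "no lower bound"
    maximum: Optional[float] = None  # None means "no upper bound"
    min_inclusive: bool = True       # True: value may equal the minimum
    max_inclusive: bool = True       # True: value may equal the maximum

    def contains(self, value: float) -> bool:
        """Return True if the value lies inside the range."""
        if self.minimum is not None:
            if value < self.minimum:
                return False
            if value == self.minimum and not self.min_inclusive:
                return False
        if self.maximum is not None:
            if value > self.maximum:
                return False
            if value == self.maximum and not self.max_inclusive:
                return False
        return True


# Strictly positive values: greater than zero, zero itself excluded.
positive_only = RangeRule(minimum=0, min_inclusive=False)

# A percentage: 0 and 100 are both allowed.
percentage = RangeRule(minimum=0, maximum=100)

print(positive_only.contains(0))    # zero is excluded by the exclusive bound
print(positive_only.contains(0.5))
print(percentage.contains(100))     # the inclusive upper bound is allowed
```

Keeping the inclusive/exclusive flag separate from the bound itself means the same numeric limit can express either "at least zero" or "strictly greater than zero".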

Verifying Data

In addition to enhancing schema definition, range overlay support has now been integrated into the Semantic Engine’s Data Verification tool. This means researchers can not only define expected value ranges in their schema, but also actively check their datasets against those ranges during the verification process.

When you upload your dataset into the Data Verification tool, everything runs locally on your machine for privacy and security, so you can verify your data directly in your web browser. The tool scans each field for compliance with the defined range constraints and flags any values that fall outside the expected bounds. This makes it easy to identify and correct data quality issues early in the research workflow, without needing to write custom scripts or rely on external verification services.
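For readers curious about what such a check involves, the following Python sketch shows the general idea of scanning a tabular dataset against range constraints. It is not the Data Verification tool's code; the rule table, the field names, and the `find_violations` function are assumptions made up for this illustration.

```python
import csv
import io
import math

# Hypothetical rules: field name -> (minimum, maximum, min_inclusive, max_inclusive).
# None means the range is unbounded on that side.
RULES = {
    "age_years": (0, 120, True, True),
    "mass_kg": (0, None, False, True),  # strictly greater than zero
}


def in_range(value, lo, hi, lo_inclusive, hi_inclusive):
    """Check one numeric value against optional lower and upper bounds."""
    if lo is not None and (value < lo or (value == lo and not lo_inclusive)):
        return False
    if hi is not None and (value > hi or (value == hi and not hi_inclusive)):
        return False
    return True


def find_violations(csv_text, rules):
    """Return (row_number, field, raw_value) for every value that fails its rule.

    Row numbers count data rows from 1 (the header row is not counted).
    Values that cannot be parsed as numbers are flagged as well.
    """
    violations = []
    reader = csv.DictReader(io.StringIO(csv_text))
    for row_number, row in enumerate(reader, start=1):
        for field, (lo, hi, lo_inc, hi_inc) in rules.items():
            raw = row.get(field)
            try:
                value = float(raw)
            except (TypeError, ValueError):
                violations.append((row_number, field, raw))
                continue
            if math.isnan(value) or not in_range(value, lo, hi, lo_inc, hi_inc):
                violations.append((row_number, field, raw))
    return violations


SAMPLE = """age_years,mass_kg
34,70.5
130,82.0
51,0
"""

for row_number, field, raw in find_violations(SAMPLE, RULES):
    print(f"row {row_number}: {field}={raw!r} is outside the expected range")
```

In this sample, the second data row is flagged because the age exceeds the maximum, and the third because a mass of zero falls on an exclusive lower bound.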

Empowering Researchers to Ensure Data Quality

Whether you’re working with clinical measurements, survey responses, or experimental results, this feature lets you catch outliers, prevent errors, and ensure your data adheres to the standards you’ve set, all in a user-friendly interface.

Written by Carly Huitema